Patient (Cyber) Safety

I’m writing this week’s newsletter from one of the reading rooms at the British Library. (I promise this is relevant to the topic.) Despite Prince/King Charles’ opinion that they feel like assembly halls of an academy for secret police (does he know something we don’t?) the reading rooms are actually elegant, quiet, modernist spaces with more built-in desks than people. For my purposes, they’re a respite from the crowded, noisier public spaces throughout the rest of the library.

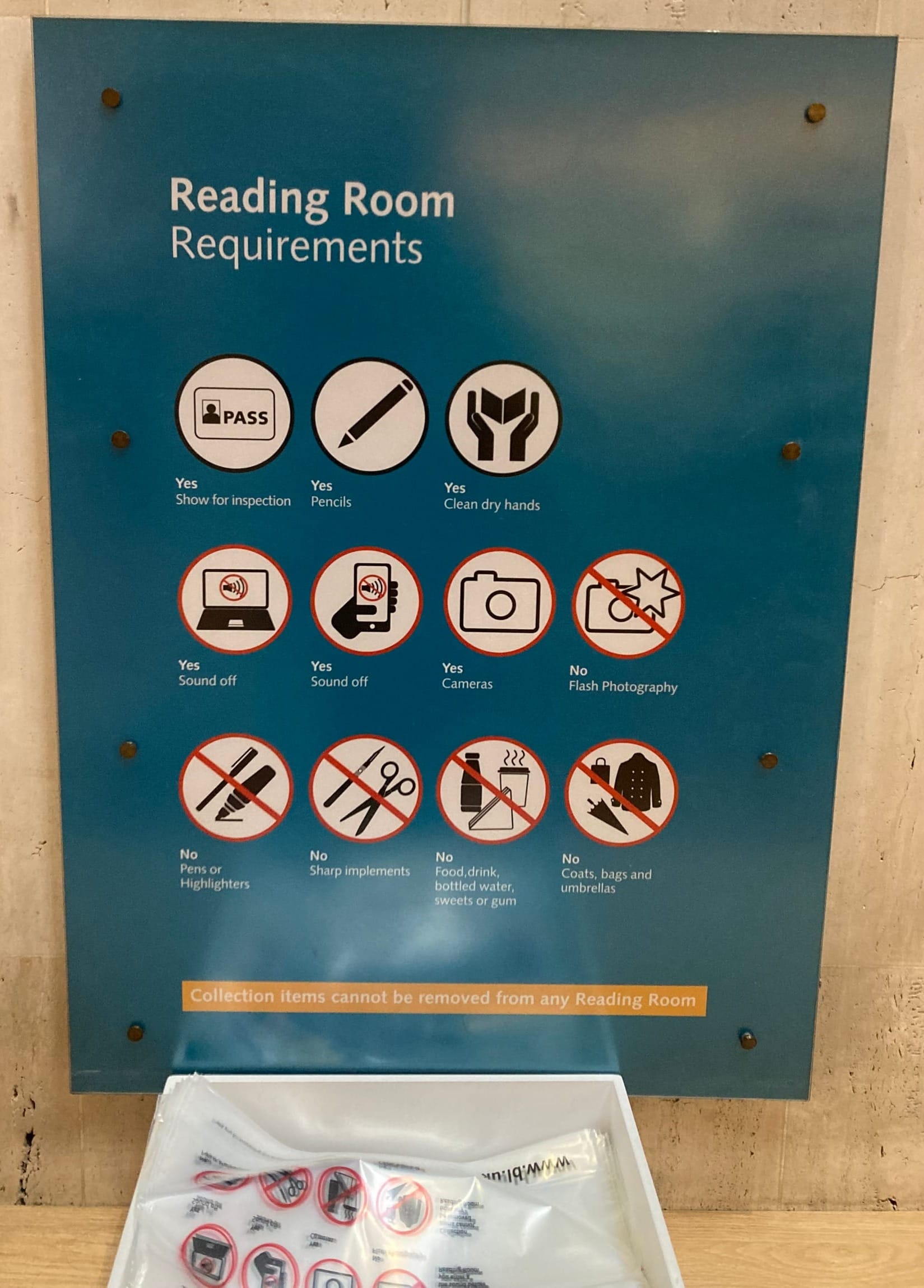

To use one of the reading rooms, though, you have to follow a number of rules.

First, you have to obtain a Reader Pass, which requires both photo ID and proof of UK residency. Having accomplished that, you can head down to the locker room in the basement to store your coat, backpack, bike helmet, food, water bottle, and any other personal items. Oh, and pens as well. Pencils are allowed in the reading rooms, but no pens. The locker room supplies clear plastic bags for you to carry your laptop, notebook, and pencil up to any of the 4 multi-level reading rooms. Staff at the entrance of each room examine your pass (along with your photo ID if you’re visiting one of the more sensitive areas such as the Rare Books room). Later, when exiting the room, they might ask you to open up your laptop to prove that you’re not hiding anything (such as, say, a page sliced out of a valuable medieval illuminated manuscript).

Why are they so strict about security? It’s all about protecting their collections against theft and damage. These are not just theoretical concerns. Libraries with rare books have long been targets for thieves. (Even the blank pages in old books are sometimes stolen, specifically by document forgers.)

But there’s a twist to my story: The only form of British Library Reader Pass currently available is a three-month temporary pass which allows entry into the reading rooms for personal study, but not access to the library’s books or other holdings. Why? Because a cyberattack in October 2023 destroyed much of the library’s IT infrastructure, and it is still being rebuilt.

I find it ironic, but not surprising, that a library could have such an elaborate physical security system, yet fail at cybersecurity. Cybersecurity is hard, and a lot of organisations are still learning how to do it well. (The library recently published a report on their own “Learning lessons” from the incident.)

I tell this story because I fear we’re seeing something analogous playing out in healthcare. Hospitals and health systems have gotten much better at patient safety over recent decades, but they’re struggling when it comes to patient cyber-safety.

I’m old enough to remember when Lucian Leape published his article Error in Medicine in JAMA in 1994, helping kick off the patient safety movement. Later, in 2000, came the Institute of Medicine report To Err Is Human, including the famous quote about medical errors killing a jumbo jet’s worth of people each day. Medical news from that era was full of stories of surgeons operating on the wrong side of a body because someone prepped the wrong knee, or hung a CT film backwards on the light box. There were lots of stories of medication errors, too, like the tragedy of Betsy Lehman, the Boston Globe healthcare journalist killed by a fourfold overdose of a chemotherapy drug after a dosing error went undetected. These types of stories seem less frequent today, and I personally credit hospitals for improving their processes to reduce serious errors.

So what about cyber-safety? It seems that a major healthcare ransomware event is reported every couple of weeks. The latest one involves Optum’s Change [for the worse] Healthcare, which processes roughly a third of the country’s health insurance claims. Smaller clinics, independent pharmacies, and others with less of a financial cushion have been hit particularly hard by the inability to process their huge daily loads of claims. A particular concern involving Change was the apparent payment of a $22M ransom by UnitedHealth-owned Optum, which promises to both fund and encourage future attacks. (The British Library, in contrast, was very clear from the outset that they never pay ransoms.)

Hospitals get hit as well, such as this recent attack on a children’s hospital.

Cybercrime harms patients in a number of ways. Most immediately, operational and information-flow disruptions delay care and increase the likelihood of medical errors. Second, hackers exploit the stolen data. Medical data is reportedly one of the most valuable categories of black market personal data. One theory is the high price comes from the potential to use it for blackmail and other forms of extortion. (Senator, how much would you pay me to not release your history of sexually-transmitted diseases to the press? How about your abortion?)

There's also a larger, more insidious problem in patient cyber-safety. This one has nothing to do with organised crime (at least, not the way people usually use that term). Hospitals, pharmacies, and (some) commercial laboratories routinely sell de-identified medical records to pharmaceutical and other companies. Much of this flows through data brokers such as IQVIA (specifically their IMS Health division) and Truveta (a joint venture of a number of large not-for-profit health systems). A longer list of health data brokers can be found on this website, along with brief explanations of what buyers do with the data.

Over the past 3 years I’ve led a research project in which colleagues from the American Medical Informatics Association and I interviewed healthcare executives about how their institutions provide data to third parties for nonclinical, non-legally-mandated purposes. Our journal manuscript isn’t yet published, so I can’t yet link to it, but one of our findings was that healthcare leaders tend to justify data “sharing” in two ways. One is that in most cases, data is stripped of identifiers prior to sharing/sale. Unfortunately, while this satisfies US privacy law (click here for my recent OpEd and video interview on the need for HIPAA reform), it doesn’t prevent anyone from cross-referencing the data against other data sets to re-identify it, which is quite easy for companies to do. The other justification is that healthcare data is valuable for academic research purposes. I would find that justification compelling if only these institutions were fastidious about policing the downstream uses of their data, in particular preventing commercial uses.
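For readers who want to see how little effort that cross-referencing can take, here is a minimal sketch in Python. Everything in it is made up for illustration: the names, the records, and the choice of matching fields (3-digit ZIP prefix, birth year, sex) are my assumptions, not taken from any real data set or from our study. Real-world linkage would typically combine more fields and far larger tables, but the basic idea is the same: join the "de-identified" records to a second, named data set on the attributes they have in common.

```python
# Toy linkage sketch. All records below are synthetic and hypothetical.

# "De-identified" medical records: no names, but some attributes remain.
deidentified_records = [
    {"zip3": "021", "birth_year": 1961, "sex": "F", "diagnosis": "depression"},
    {"zip3": "021", "birth_year": 1984, "sex": "M", "diagnosis": "type 2 diabetes"},
    {"zip3": "606", "birth_year": 1975, "sex": "F", "diagnosis": "asthma"},
]

# A second data set that does carry names (for example, a hypothetical
# marketing list or public register).
named_records = [
    {"name": "Alice Example", "zip3": "021", "birth_year": 1961, "sex": "F"},
    {"name": "Bob Sample", "zip3": "021", "birth_year": 1984, "sex": "M"},
    {"name": "Carol Placeholder", "zip3": "941", "birth_year": 1990, "sex": "F"},
]

# Fields shared by both data sets (an assumption made for this example).
QUASI_IDENTIFIERS = ("zip3", "birth_year", "sex")


def linkage_key(record):
    """Build a tuple of the shared attributes to join on."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(medical, named):
    """Return (name, diagnosis) pairs where the shared attributes
    point to exactly one named person."""
    names_by_key = {}
    for person in named:
        names_by_key.setdefault(linkage_key(person), []).append(person["name"])

    matches = []
    for record in medical:
        candidates = names_by_key.get(linkage_key(record), [])
        if len(candidates) == 1:  # unambiguous match only
            matches.append((candidates[0], record["diagnosis"]))
    return matches


matches = reidentify(deidentified_records, named_records)
for name, diagnosis in matches:
    print(f"{name}: {diagnosis}")
```

In this toy example, two of the three "anonymous" records end up with a name attached. The third has no counterpart in the named list, which is the only reason it stays anonymous.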

Which brings me to the last item in this newsletter, a NYTimes story on car manufacturers selling data from on-board sensors (including data on hard acceleration, hard braking, and other data that could represent aggressive driving) to insurance companies without the knowledge of car buyers. If car insurance companies are buying this kind of data from GM and Honda for either customer selection or rate-setting, what do you think life insurers and long-term care insurers are doing vis-a-vis healthcare data brokers? How about corporate recruiters interested in filtering out candidates based on their mental health histories? The list of potential abuses is long, and current protections are weak.

Bottom line: It’s time to expand the definition of patient safety to include cyber-safety. Actually, “digital safety” might be a better term, because the bad guys here aren’t just Russian/Chinese/North Korean hacking gangs. They also likely include your local hospital, in cahoots with a huge data brokering industry, along with eager corporate buyers looking to exploit your private data for their own profits.